Title: Benchmarking compilation time with ccache/mfs on OpenBSD

Author: Solène

Date: 18 September 2021

Tags: openbsd benchmark


# Introduction

I have always wondered how to make package builds faster. There are at least two easy tricks available: storing temporary data in RAM and caching build objects.

Caching build objects can be done with ccache. It intercepts cc and c++ calls (the programs compiling C/C++ files) and, depending on the inputs, either reuses a previously built object if one is available or builds normally and stores the result for potential reuse. It is of almost no use when you build software only once, because objects must be cached before the cache becomes useful. It obviously doesn't work for non-C/C++ programs.
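The reuse logic described above can be illustrated with a toy model. This is a minimal Python sketch, not ccache's actual implementation: the `CompileCache` class and `fake_cc` function are hypothetical, the cache lives in a dictionary rather than on disk, and the key only covers the source text and compiler flags, whereas real ccache also hashes things like the compiler itself and the preprocessed input.

```python
import hashlib
from typing import Callable, Dict, List


# Hypothetical toy model of a compiler cache; real ccache hashes more inputs
# (compiler, preprocessed source, ...) and stores objects on disk.
class CompileCache:
    def __init__(self, compile_fn: Callable[[str, List[str]], bytes]) -> None:
        self.compile_fn = compile_fn       # stands in for running cc/c++
        self.store: Dict[str, bytes] = {}  # input hash -> object code
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(source: str, flags: List[str]) -> str:
        # Everything that can change the resulting object must be in the key.
        return hashlib.sha256(repr((flags, source)).encode()).hexdigest()

    def compile(self, source: str, flags: List[str]) -> bytes:
        k = self.key(source, flags)
        if k in self.store:
            self.hits += 1                  # reuse the cached object
            return self.store[k]
        self.misses += 1                    # build normally...
        obj = self.compile_fn(source, flags)
        self.store[k] = obj                 # ...and keep it for the next build
        return obj


if __name__ == "__main__":
    def fake_cc(source: str, flags: List[str]) -> bytes:
        return f"object({' '.join(flags)}|{source})".encode()

    cache = CompileCache(fake_cc)
    src = "int main(void) { return 0; }"
    cache.compile(src, ["-O2"])  # first build: miss, the compiler runs
    cache.compile(src, ["-O2"])  # second build: hit, the compiler is skipped
    cache.compile(src, ["-O0"])  # different flags: miss again
    print(cache.hits, cache.misses)  # 1 2
```

The very first compilation is always a miss, which is why a cache only pays off from the second build onward.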

The other trick is using a temporary filesystem stored in memory (RAM). On OpenBSD we will use mfs, but on Linux or FreeBSD you could use tmpfs. The difference between the two is that mfs reserves the given memory, while tmpfs is faster and doesn't reserve the memory of its filesystem (which has pros and cons).

So, I decided to measure the build time of the Gemini browser Lagrange in three cases: without ccache, with ccache on a first build (so it has no cached objects yet), and with ccache already holding the objects. I ran these three tests multiple times because I also wanted to measure the impact of using a memory-based filesystem versus the old spinning hard disk drive in my computer. This made a lot of tests, because I tried ccache on mfs with the package build objects (later referred to as pobj) on mfs, then one on the hdd and the other on mfs, and so on.
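The number of runs adds up quickly. As a small sketch (the `test_matrix` function is hypothetical, written only to count the runs), these are the configurations that appear in the results table: pobj on mfs or hdd without ccache, plus ccache and pobj each on mfs or hdd with either an empty or a warm cache.

```python
from itertools import product

LOCATIONS = ("mfs", "hdd")  # where the ccache directory or pobj can live


def test_matrix():
    """List every (ccache location, pobj location, cache state) measured."""
    runs = []
    # Without ccache there is no cache state to vary, only where pobj lives.
    for pobj in LOCATIONS:
        runs.append(("none", pobj, "n/a"))
    # With ccache: both locations for each directory, empty or warm cache.
    for ccache, pobj, state in product(LOCATIONS, LOCATIONS, ("empty", "warm")):
        runs.append((ccache, pobj, state))
    return runs


for run in test_matrix():
    print(run)
print(len(test_matrix()), "configurations")  # 10 configurations
```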

To proceed, I compiled net/lagrange using dpb, cleaning the generated lagrange package every time. Using dpb made measurement a lot easier and the setup was reliable. It added some overhead when checking dependencies (which were already installed in the chroot), but the point was to compare the time differences between the various tweaks.

# Results

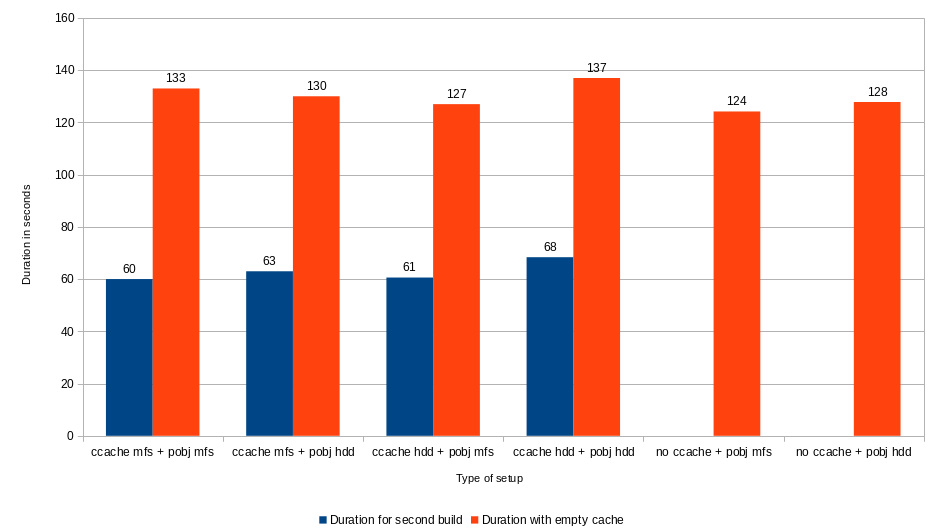

Here are the results, raw and as a graph. I sometimes ran the same test multiple times to check whether the results were widely dispersed, but they were reliable to within +/- 1 second.

```Raw results
Type                     Second build (s)   Empty cache or no ccache (s)
ccache mfs + pobj mfs          60                    133
ccache mfs + pobj hdd          63                    130
ccache hdd + pobj mfs          61                    127
ccache hdd + pobj hdd          68                    137
no ccache + pobj mfs          n/a                    124
no ccache + pobj hdd          n/a                    128
```
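To put the second-build numbers in perspective, this Python sketch divides the no-ccache time by the warm-cache time for each combination. Pairing each run with the no-ccache run that uses the same pobj location is an assumption made for this comparison; the durations are copied from the raw results, and `speedup` is a hypothetical helper.

```python
# Durations in seconds, copied from the raw results.
SECOND_BUILD = {  # (ccache location, pobj location) -> warm-cache build
    ("mfs", "mfs"): 60,
    ("mfs", "hdd"): 63,
    ("hdd", "mfs"): 61,
    ("hdd", "hdd"): 68,
}
NO_CCACHE = {"mfs": 124, "hdd": 128}  # keyed by pobj location


def speedup(ccache_loc: str, pobj_loc: str) -> float:
    """No-ccache build time divided by warm-cache build time, same pobj location."""
    return NO_CCACHE[pobj_loc] / SECOND_BUILD[(ccache_loc, pobj_loc)]


for (ccache_loc, pobj_loc), secs in SECOND_BUILD.items():
    print(f"ccache {ccache_loc} + pobj {pobj_loc}: {secs} s, "
          f"{speedup(ccache_loc, pobj_loc):.2f}x faster than no ccache")
```

All four combinations come out at roughly 1.9x to 2.1x, so with a warm cache the Lagrange build takes about half the time, wherever the files live.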


# Results analysis

At first glance, we can see that not using ccache results in slightly faster builds than using ccache with an empty cache (2 to 9 seconds faster in these runs), so ccache has a small performance cost when there are no cached objects.

Then, we can see that the results are very close to each other, except for ccache and pobj both on the hdd, which is the slowest combination: 68 seconds for the second build versus 60 to 63 seconds for the others, and 137 seconds with an empty cache, the slowest of all runs.
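The two observations above can be checked against the table with a few lines of Python. This sketch (hypothetical helper `cold_overhead`, data copied from the raw results) computes how much an empty ccache costs compared to the no-ccache build using the same pobj location, and finds the slowest second build.

```python
# Durations in seconds, copied from the raw results.
EMPTY_CACHE = {  # (ccache location, pobj location) -> first build with ccache
    ("mfs", "mfs"): 133,
    ("mfs", "hdd"): 130,
    ("hdd", "mfs"): 127,
    ("hdd", "hdd"): 137,
}
SECOND_BUILD = {  # same keys -> build with a warm cache
    ("mfs", "mfs"): 60,
    ("mfs", "hdd"): 63,
    ("hdd", "mfs"): 61,
    ("hdd", "hdd"): 68,
}
NO_CCACHE = {"mfs": 124, "hdd": 128}  # keyed by pobj location


def cold_overhead(ccache_loc: str, pobj_loc: str) -> int:
    """Extra seconds an empty ccache costs versus building without ccache."""
    return EMPTY_CACHE[(ccache_loc, pobj_loc)] - NO_CCACHE[pobj_loc]


for ccache_loc, pobj_loc in EMPTY_CACHE:
    extra = cold_overhead(ccache_loc, pobj_loc)
    pct = 100 * extra / NO_CCACHE[pobj_loc]
    print(f"ccache {ccache_loc} + pobj {pobj_loc}: +{extra} s ({pct:.1f}%)")

# The combination with the longest warm-cache build.
slowest = max(SECOND_BUILD, key=SECOND_BUILD.get)
print("slowest second build:", slowest, SECOND_BUILD[slowest], "s")
```

In relative terms, the empty-cache penalty stays under 8% of the build time.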

# Problems encountered

My building system has 16 GB of memory and 4 cores. I want builds to be as fast as possible, so I use all 4 cores. For some programs using Rust (like Firefox), more than 8 GB of memory (4x 2 GB) is required because of Rust, so I need to keep a lot of memory available. I once tried to build it with a 10 GB mfs filesystem, but during packaging it reached the filesystem limit and failed; it also swapped during the build process.

When using an 8 GB mfs for pobj, I kept hitting the limit, which caused build failures: building four ports in parallel can take a lot of disk space, especially at package time when the result is copied. It's not always easy to store everything in memory.
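The memory pressure described above is simple arithmetic. The sketch below uses the figures from the text (16 GB of RAM, 4 parallel jobs, roughly 2 GB per Rust job) and a hypothetical `free_memory_gb` helper. It ignores the kernel, the buffer cache and any other process, and since Rust builds need more than 8 GB, 2 GB per job is a lower bound, so the real margin is smaller than what it prints.

```python
def free_memory_gb(total_gb: float, jobs: int, per_job_gb: float,
                   mfs_gb: float) -> float:
    """RAM left once every build job and a full mfs are accounted for.

    Hypothetical, simplified model: ignores the kernel, the buffer cache
    and other programs. A negative result means the machine has to swap.
    """
    return total_gb - jobs * per_job_gb - mfs_gb


# Figures from the text: 16 GB of RAM, 4 parallel jobs, about 2 GB per job.
for mfs_size in (10, 3):
    left = free_memory_gb(16, 4, 2, mfs_size)
    status = "swapping expected" if left < 0 else "fits"
    print(f"{mfs_size} GB mfs: {left:+.0f} GB left, {status}")
```

A 10 GB mfs leaves a negative margin, consistent with the swapping observed, while a 3 GB mfs still leaves a few gigabytes of headroom.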

I decided to go with a 3 GB ccache on mfs and keep the pobj on the hdd.

I had no spare SSD, so I couldn't add one to the comparison. :(

# Conclusion

Using mfs for at least ccache or pobj, but not necessarily both, is beneficial. I would recommend putting ccache on mfs, because storing it only requires 1 or 2 GB of memory for regular builds, while storing the pobj in mfs could require a few dozen gigabytes of memory (I think chromium required 30 or 40 GB the last time I tried).